I have seen this concept used in Product in the past, but I don't think it is as widespread in Engineering. Metrics are a delicate and dangerous matter in software development, and the more we understand the domain, the better.

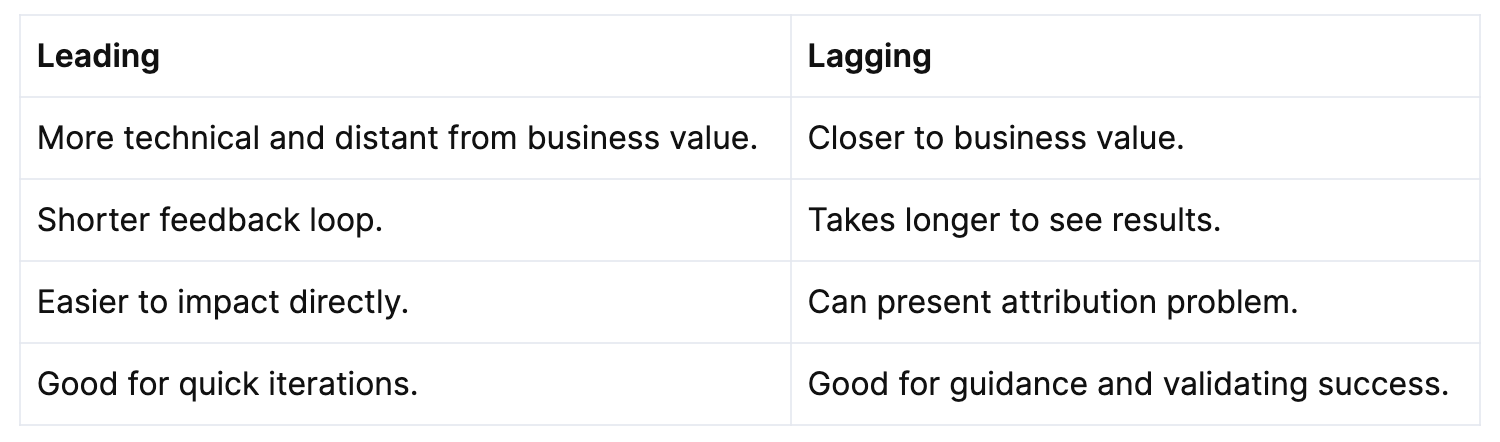

A simplistic definition is that leading indicators hint at something that might happen in the future, while lagging indicators tell a story about what happened in the past. They really clicked in my head when I started understanding what makes them behave that way:

Imagine that our customers are finding bugs frequently, and our development team, tired of testing manually, suggests introducing automated tests.

In this example, test coverage can be a good candidate for our leading indicator, one that the team can iterate on weekly. And this is because:

All of that sounds good. But notice what can happen if you pair it with a lagging indicator, one like escaped defects, the number of bugs found in production:

This last point is particularly important. In my experience, lagging indicators take longer to move but are closer to business value, and they are a good representation of the current health of the project. They give you an overview, a long-term baseline that you can improve over time.

One of the reasons for that is that they tend to be the symptom of multiple factors. Many things can affect escaped defects beyond a poor automation suite. Focusing on improving the ultimate goal will help you pick the right battles.

But this trait of lagging indicators, being affected by multiple factors, is also their challenge. If the indicator moved, how do I know it was because of the specific change we made and not something else? There is an attribution problem, and I'm afraid I don't have a silver bullet for it. You can rely on industry research, which might not apply to your specific case, or experiment within your organisation to validate it yourself, but you might not have the means for that.

These are the reasons why I believe it is good practice to pair a leading indicator, to iterate quickly, with a lagging indicator, to guide us and validate success.
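To make the pairing a bit more concrete, here is a minimal Python sketch of how the two indicators from the example could be tracked side by side: test coverage sampled weekly (leading) and escaped defects counted per month (lagging). The names `CoverageSample`, `EscapedDefect`, `weekly_coverage_change` and `escaped_defects_per_month` are hypothetical, and the numbers are made up for illustration. In practice the data would come from your CI and bug tracker.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class CoverageSample:
    """Hypothetical weekly reading of test coverage (leading indicator)."""
    week_start: date
    coverage_pct: float


@dataclass(frozen=True)
class EscapedDefect:
    """Hypothetical record of a bug found in production (lagging indicator)."""
    reported_on: date


def weekly_coverage_change(samples):
    """Return (week_start, change in percentage points) versus the previous sample."""
    ordered = sorted(samples, key=lambda s: s.week_start)
    return [
        (cur.week_start, round(cur.coverage_pct - prev.coverage_pct, 2))
        for prev, cur in zip(ordered, ordered[1:])
    ]


def escaped_defects_per_month(defects):
    """Count escaped defects per (year, month), sorted chronologically."""
    counts = Counter((d.reported_on.year, d.reported_on.month) for d in defects)
    return dict(sorted(counts.items()))


# Made-up data, only to show the shape of the two views.
coverage = [
    CoverageSample(date(2024, 1, 1), 40.0),
    CoverageSample(date(2024, 1, 8), 43.5),
    CoverageSample(date(2024, 1, 15), 47.0),
]
defects = [
    EscapedDefect(date(2024, 1, 5)),
    EscapedDefect(date(2024, 1, 20)),
    EscapedDefect(date(2024, 2, 11)),
]

print(weekly_coverage_change(coverage))    # fast feedback for the team
print(escaped_defects_per_month(defects))  # slower, closer to business value
```

The weekly view gives the team something to iterate on, while the monthly view is the baseline to watch over time. Note that this sketch does nothing to solve the attribution problem: a drop in escaped defects next to rising coverage is a hint, not proof.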

I'm a fractional CTO who enjoys working with AI-related technologies. I have dedicated more than 15 years to serving SaaS companies. I have worked with small startups and international scale-ups in Europe, the UK and the USA, including renowned companies like Typeform.

I now help startups achieve high growth and performance through best practices and Generative AI.